Norman Sanders

Cambridge Computer Lab Ring, William Gates Building, Cambridge, England

ProjX, Walnut Tree Cottage, Tattingstone Park, Ipswich, Suffolk IP9 2NF, England

Abstract

This paper discusses the early days of CAM-CAD at the Boeing Company, covering approximately the period 1956 to 1965. This period saw probably the first successful industrial application of ideas that were gaining ground during the very early days of the computing era. Although the primary goal of the CAD activity was to find better ways of building the 727 airplane, the work soon opened up the more general field of computer graphics and led eventually to today’s picture-dominated use of computers.

Keywords: CAM, CAD, Boeing, 727 airplane, numerical-control.

1. Introduction to Computer-Aided Design and Manufacturing

Some early attempts at CAD and CAM systems occurred in the 1950s and early 1960s. We can trace the beginnings of CAD to the late 1950s, when Dr. Patrick J. Hanratty developed Pronto, the first commercial numerical-control (NC) programming system. In 1963, Ivan Sutherland at MIT's Lincoln Laboratory created Sketchpad, which demonstrated the basic principles and feasibility of computer-aided technical drawing.

There seems to be no generally agreed date or place at which Computer-Aided Design and Manufacturing first saw the light of day as a practical tool for making things. However, I know of no earlier candidate for this role than Boeing’s 727 aircraft. Certainly the dates given in the current version of Wikipedia are woefully late, by ten years or so.

So, this section describes what we did at Boeing from about the mid-fifties to the early sixties. As with most projects, it is difficult to say precisely when this one started. Projects don’t start; but once started, they can become very difficult to finish. At least we can talk in terms of mini eras, approximate points in time when ideas began to circulate and concrete results began to emerge.

The first published ideas for describing physical surfaces mathematically were probably those in Roy Liming’s Practical Analytic Geometry with Applications to Aircraft (Macmillan, 1944). His project was the Mustang fighter. Sadly, however, Liming was way ahead of his time; there were as yet no working computers or ancillary equipment to make use of his ideas. Luckily, we had a copy of the book at Boeing, which got us off to a flying start. We also had a mighty project on which to try our ideas, and a team of old B-17/B-29 engineers, by now running the company, who were rash enough to allow us to commit to an as yet unused and therefore unproven technology.

Computer-aided manufacturing (CAM) comprises the use of computer-controlled manufacturing machinery to assist engineers and machinists in manufacturing or prototyping product components, either with or without the assistance of CAD. CAM certainly preceded CAD, and played a pivotal role in bringing CAD to fruition by acting as a drafting machine in the very early stages. All early CAM parts were made from the engineering drawing. The origins of CAM were so widespread that it is difficult to know whether any one group was aware of another. However, the NC machinery suppliers, Kearney & Trecker and others, certainly knew their customers and would have helped them get to know one another, while the aerospace industry traditionally collaborated at the technical level however hard its companies competed in selling airplanes.

2. Computer-Aided Manufacturing (CAM) in the Boeing Aerospace Factory in Seattle

(by Ken McKinley)

The world’s first two computers, built at the universities of Manchester and Cambridge, began to function in 1948 and 1949 respectively, and were set to work carrying out numerical computations in support of scientific problems of a mathematical nature. Their designers gave little thought, if any, to using them for industrial purposes. Within a few years, however, applications had already spread to supporting industry, and by 1953 Boeing was able to order a range of Numerically-Controlled machine tools, which required computers to transform tool-makers’ instructions into machine instructions. This is a little-remembered fact of the early history of computers, but it was probably the first time a computer application broke away from the immediate vicinity of the computer room.

The work of designing the software, that is, the task of converting the drawing of a part to be milled into the languages of the machines, was carried out by a team of about fifteen people from Seattle and Wichita under my leadership. It was called the Boeing Parts-Programming system, the precursor to an evolutionary series of Numerical Control languages that included APT (Automatically Programmed Tooling), designed by Professor Doug Ross of MIT. The astounding historical fact here is that this was among the first-ever computer compilers. It followed very closely on the heels of the first version of FORTRAN. Indeed, it would be very interesting to find out what, if anything, preceded it.

Early as it was in the history of computer languages, members of the team were already aficionados of two rival contenders for the job: FORTRAN on the IBM 704 in Seattle, and COBOL on the 705 in Wichita. This almost inevitably resulted in the creation of two systems, Boeing and Waldo (though they appeared identical to the user), even though, ironically, neither language was actually used in the implementation. Remember, we were still very early in the development of computers, and no one yet had a monopoly of wisdom on how to do anything.

The actual programming of the Boeing system was carried out in machine language rather than in either of the higher-level languages, since the latter were aimed at a very different problem area from that of determining the requirements of machine tools.

Part of the training of the implementation team consisted of working with members of the Manufacturing Department, probably one of the first-ever interdisciplinary enterprises involving computing. The computer people had to learn the language the Manufacturing Engineer used to describe aluminium parts and the milling machine processes required to produce them. The users of this new language were to be called Parts Programmers (as opposed to computer programmers).

A particularly tough part of the programming effort lay in the “post processors”, the programs that generated the detailed instructions sent from the computer to the milling machine. To make life interesting, there was no standardisation between the available machine tools. Each had a different physical input mechanism: magnetic tape (analog or digital), punched Mylar tape or punched cards. The post processors also had to accommodate differences in the format of each type of data. This required lots of discussion with the machine tool manufacturers, all very typical of a new industry before standards came about.
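To make the post-processor idea concrete, here is a minimal sketch in modern Python; it is not Boeing’s code, which was written in machine language. It assumes a machine-independent list of tool moves and two hypothetical output formats, a fixed-width 80-column card layout and a letter-prefixed tape layout, whose field widths and units are invented purely for illustration. The only point it makes is that one tool path yields different output for each machine.

```python
from dataclasses import dataclass


@dataclass
class Move:
    """One machine-independent tool move; coordinates in inches."""
    x: float
    y: float
    z: float


def format_card_machine(move: Move) -> str:
    # Hypothetical card layout: three 10-character fields in thousandths
    # of an inch, padded out to the full 80-column card width.
    fields = "".join(f"{round(v * 1000):10d}" for v in (move.x, move.y, move.z))
    return fields.ljust(80)


def format_tape_machine(move: Move) -> str:
    # Hypothetical tape layout: letter-prefixed signed words,
    # values in ten-thousandths of an inch.
    return (f"X{round(move.x * 10000):+d} "
            f"Y{round(move.y * 10000):+d} "
            f"Z{round(move.z * 10000):+d}")


# One formatter per (hypothetical) machine type.
POST_PROCESSORS = {
    "card": format_card_machine,
    "tape": format_tape_machine,
}


def post_process(moves: list[Move], machine: str) -> list[str]:
    """Translate the same tool path into one machine's input format."""
    fmt = POST_PROCESSORS[machine]
    return [fmt(m) for m in moves]


if __name__ == "__main__":
    path = [Move(0.0, 0.0, 1.0), Move(2.5, 0.0, -0.125)]
    for machine in POST_PROCESSORS:
        for line in post_process(path, machine):
            print(machine, repr(line.rstrip()))
```

Keeping the tool path separate from the per-machine formatting means that adding a new machine tool only requires a new formatter, which is roughly the burden the post processors carried.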

A memorable sidelight, just to make things even more interesting, was that Boeing had one particular type of machine tool that required analog magnetic tape as input. To produce this tape, the 704 system first punched the post-processor data onto standard cards. These were then sent from the Boeing plant to downtown Seattle for conversion to magnetic tape, then back to the Boeing Univac 1103A for conversion from magnetic to punched tape, which was in turn sent to Wichita to produce the analog magnetic tape. This made the 1103A the world’s largest and most expensive punched-tape machine. As a historical footnote, anyone brought up in the world of PCs and electronic data transmission should be aware of what it was like back in the good old days!

Another sidelight was that detecting and correcting parts-programming errors was a serious problem, costly in both time and material. The earliest solution was to make an initial cut in wood or plastic foam or, on suitable machine tools, to replace the cutter with a pen or diamond scribe to ‘draw’ the part. Thus came the first-ever use of an NC machine tool as a computer-controlled drafting machine, a technique that later proved vital to the advent of Computer-Aided Design.

Meanwhile, the U.S. Air Force recognised that the cost and complication of the diverse solutions provided by its many suppliers of Numerical Control equipment was a serious problem. Because of the Air Force’s association with MIT, it was aware of Professor Doug Ross’s efforts to develop a standard NC computer language. Ken McKinley, as the Boeing representative, spent two weeks at the first APT (Automatically Programmed Tooling) meeting at MIT in late 1956, together with representatives from many other aircraft-related companies, to agree on the basic concepts of a common system to which each company would contribute a programmer for a year. Boeing committed to supporting mainly the ‘post processor’ area. Henry Pinter, one of our post-processor experts, was sent for a year to San Diego, where the joint effort was based. As usually happened in those pioneering days, it took more like 18 months to complete the project. After that we had to implement APT in our own environment at Seattle.

Concurrently with the implementation, we had to sell the new system to ourselves and to the users. It was a tough sell, believe me, as Norm Sanders was later to discover over at the Airplane Division. We had overcome the many challenges of this new technology, which we called NC, and our own system was working well. Its users were not anxious to change to an unknown new language that was more complex. But upper management recognised the need to change, not least because of the imminent arrival of another new technology called Master Dimensions.

3. Computer-Aided Design (CAD) in the Boeing Airplane Division in Renton

(by Norman Sanders)

The year was 1959. I had just joined Boeing in Renton, Washington, at a time when engineering design drawings the world over were made by hand, as they had been since the beginning of time; the definition of every motorcar, aircraft, ship and mousetrap consisted of lines drawn on paper, often accompanied by mathematical calculations where necessary and possible. What is more, all animated cartoons were drawn by hand. At that time, it would have been unbelievable that what was going on in the aircraft industry could have any effect on The Walt Disney Company or on the emergence of the computer games industry. Nevertheless, it did. This strange fact of history needs a bit of telling.

I was very fortunate to find myself working at Boeing in the years following the successful introduction of its 707 aircraft into the world’s airlines. This period coincided exactly with the explosive spread of large computers into the industrial world. There was a desperate need for computer power, and for a computer manufacturer with the capacity to satisfy that need. The first two computers actually to work started productive life in 1948 and 1949, at the universities of Manchester and Cambridge in England. The Boeing 707 started flying five years after that, and by 1958 it was in airline service. The stage was set for the global cheap-travel revolution. This took everybody by surprise, not least Boeing. However, it was not long before the company needed a shorter-takeoff airplane, namely the 727, a replacement for the Douglas DC-3. In time, Boeing developed a smaller airplane, the 737, and a large-capacity one, the 747. All this meant vast amounts of computing, and as the engineers grew more accustomed to using the computer there was no end to their appetite.

It should perhaps be added that computers in those days bore little superficial resemblance to today’s computers; there were certainly no screens or keyboards! Though the actual computing went at electronic speeds, the input and output were mechanical: punched cards, magnetic tape and printed paper. In the 1950s the processor was built from vacuum tubes and the memory from ferrite cores, while large-scale data storage consisted of magnetic tape drives. We had a great day if the computer system didn’t fail during a 24-hour run; the electrical and electronic components were very fragile.

We would spend an entire day preparing for a night run on the computer. The run would take a few minutes, and we would spend the next day wading through reams of paper printout in search of something, sometimes clues to the mistakes we had made. We produced masses of paper. You did not dare leave anything unprinted for fear of letting a vital number escape. An early solution to this was faster printers. About 1960 Boeing provided me with an ANalex printer. It could print one thousand lines a minute! Very soon, of course, we had a row of ANalex printers, wall to wall, as Boeing never bought one of anything. The timber needed to feed our computer printers was incalculable.

4. The Emergence of Computer Plots

With that amount of printing going on, it occurred to me to ask the consumers of printout what they did with it all. One of the most frequent answers was that they plotted it. There were cases of engineers spending three months drawing curves resulting from a single night’s computer run. A flash of near-heresy then struck my digital mind. Was it possible to program a digital computer to draw (continuous) lines? In the computing trenches at Boeing we were not aware of the experimentation going on at research labs elsewhere. Luckily, we had at that time a Swiss engineer in our computer methods group who could both install hardware and write software for it; he knew hardware and software, both digital and analog. His name was Art Dietrich. I put to Art what seemed to me the unaskable question and, to my surprise, he thought it was possible. So off he went in search of a piece of hardware that could draw lines on paper and that we could somehow connect to our computer.

Art found two companies that made analog plotters that might be adaptable: Electro Instruments in San Diego and Electronic Associates in Long Branch, New Jersey. After yo-yoing back and forth, we chose the Electronic Associates machine. It could draw lines on paper 30 x 30 inches at about twenty inches per second. It was fast! It was also accurate enough for most purposes. But as yet it hadn’t been attached to a computer anywhere. To my knowledge, this was the first time anyone had put a plotter in the computer room and produced output directly in the form of lines. It could have happened elsewhere, though I was certainly not aware of it at the time. There was no software, of course, so I had to write it myself. The first machine ran off cards punched as output from the user programs, and I wrote a series of programs: Plot1, Plot2, etc. Encouraged by the possibility of selling another machine or two around the world, the supplier built a faster one running off magnetic tape, so I had to write a new series of programs: Tplot1, Tplot2, etc. (T for tape). In addition, the supplier bought the software from us: Boeing’s first software sale!

While all this was going on, we were pioneering something else, which we called Master Dimensions. Indeed, we pioneered many computing ideas during the 1960s. At that time Boeing was probably one of the leading users of computing worldwide, and it seemed that almost every program we wrote was a brave new adventure. Although North American had defined the major external surfaces of the wartime P-51 Mustang fighter mathematically, it could not use computers to do the mathematics or to build the aircraft, because there were no computers. An account of this truly epochal work appears in Roy Liming’s book.

By the time the 727 project started in 1960, however, we were able to tie the computer to the manufacturing process and actually define the airplane using the computer. We computed the definition of the outer surface of the 727 and stored it inside the computer, so that every reference to the definition was made via a computer run rather than by an engineer examining drawings with a magnifying glass. This was truly an industrial revolution.

Indeed, when I look back on the history of industrial computing as it stood fifty years ago, I cringe with fear. It should never have been allowed to happen, but it did. It happened because we had the right man, Grant W. Erwin Jr, in the right place, and he was the only man on this planet who could have done it. Grant was a superb leader, as opposed to a manager, and he knew his stuff like no other. He knew the mathematics, particularly numerical analysis, and where methods didn’t exist he created new ones. He was loved by his team; they would work all hours and weekends without a quibble whenever he asked them to. He was an elegant writer and an inspiring teacher. He knew what everyone was doing; he held the plan in his head. If any single person can be regarded as the inventor of CAD, it was Grant. Very sadly, he died at the age of 94, just as the ink of this chapter was drying.

When the Master Dimensions group first wrote the programs, all we could do was print numbers and draw plots on 30 x 30 inch paper with our novel plotter. Mind-blowing as this might have been, it did not do the whole job: it did not draw full-scale, highly accurate engineering lines. Computers could now draw, but they could not draw large or accurate pictures; or so we thought.

5. But CAM to the Rescue!

Now there seems to be a widely held belief that computer-aided design (CAD) preceded computer-aided manufacturing (CAM). Every mention of the topic carries the label CAD-CAM rather than the reverse, as though CAD led CAM. However, this was not the case, as Ken McKinley’s section above makes clear. Since both started in the 1956-1960 period, it seems a bit late in the day to raise an old discussion. However, there may still be a few people around with the interest and the memory to try to get the story right. The following is the Boeing version at least, as remembered by some long-retired participants.

5.1 Numerical Control Systems

The Boeing Aerospace division began to equip its factory with NC machinery about 1956. There were several suppliers and control systems, among them Kearney & Trecker, Stromberg-Carlson and Thompson Ramo Wooldridge (TRW). Boeing used them to produce somewhat complicated parts in aluminium, the programming being carried out by specially trained programmers. I hasten to say that these were not computer programmers; they were highly experienced machinists known as parts programmers. Their use of computers was simply to convert an engineering drawing into the series of simple steps required to make the part described. The language they used was similar in principle to basic computer languages in that it required a problem to be broken down into a series of simple steps; however, the similarity stopped right there. An NC language needs commands such as ‘select tool’, ‘move tool to point (x, y)’, ‘lower tool’ and ‘turn on coolant’. The process required a deep knowledge of cutting metal; the parts programmer did not need to know about memory allocation or floating point.
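The ‘series of simple steps’ can be illustrated with a small sketch. It rests on stated assumptions: a closed, straight-sided outline given as corner points in inches, a hypothetical tool number, and step names invented here rather than taken from any historical NC language. The function expands the outline into select-tool, move, lower-tool and coolant steps of the kind listed above.

```python
def profile_steps(corners, tool, depth, safe_z=1.0):
    """Expand a closed outline into simple NC-style steps.

    corners: (x, y) vertices of a straight-sided outline, in inches.
    tool:    tool number (hypothetical numbering).
    depth:   cutting depth as a negative z value; safe_z is the clearance height.
    The step names are illustrative only, not a historical NC vocabulary.
    """
    corners = list(corners)
    if len(corners) < 3:
        raise ValueError("an outline needs at least three corners")
    x0, y0 = corners[0]
    steps = [
        ("SELECT_TOOL", tool),
        ("MOVE", x0, y0, safe_z),  # position above the start corner
        ("COOLANT", "ON"),
        ("LOWER_TOOL", depth),     # plunge to cutting depth
    ]
    # Cut along each edge in turn, finishing back at the start corner.
    for x, y in corners[1:] + [corners[0]]:
        steps.append(("MOVE", x, y, depth))
    steps.append(("RAISE_TOOL", safe_z))
    steps.append(("COOLANT", "OFF"))
    return steps


if __name__ == "__main__":
    for step in profile_steps([(0, 0), (4, 0), (4, 2), (0, 2)], tool=3, depth=-0.25):
        print(step)
```

Every step is something a machinist would recognise; nothing in it concerns memory allocation or floating point, which is the contrast the paragraph above draws.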

It is important to recognize that the early history of computing was characterized much more by individual initiative from below than by standard top-down managerial decisions. Indeed, it took an unconscionable amount of time before the computing bill reached a level that attracted managerial attention. It should have been the value of computing, not its cost, that brought management to the punch. But it wasn’t. I think the reason was that we computer folk were not particularly adept at explaining to anyone beyond our own circles what we were doing. We were a corporate ecological intrusion that took some years to adjust to.

5.2 Information Consolidation at Boeing

It happened that computing at Boeing started twice, once in engineering and once in finance. My guess is that neither group was particularly aware of the other at the start. It was not until 1965 or so, after a period of conflict, that Boeing amalgamated the two areas, the catalyst being the advent of the IBM 360 system, which enabled both types of computing to cohabit the same hardware. The irony here was that the manufacturing area derived the company’s earliest tangible benefits from computing, but did not have its own computing organization; it commissioned its programs from the engineering or finance departments, depending more or less on personal contacts out in the corridor.

As Ken McKinley describes above, the factory itself used four different control media: punched Mylar tape, 80-column punched cards, analog magnetic tape and digital magnetic tape. It was rather like biological life after the Cambrian Explosion of 570 million years ago, on a slightly smaller scale. Even so, it worked! Much investment had gone into it. By 1960 NC was a part of life in the Boeing factory and in many other American factories. Manufacturing management was quite happy with the way things were and certainly not looking for any more innovation. ‘Leave us alone and let’s get the job done’ was their very understandable attitude. Nevertheless, modernisation was afoot, and they embraced it.

The 1950s was a period of explosive computer experimentation and development. In just one decade we went from 1K to 32K of memory, from no storage backup at all to multiple drives each handling a 2,400-foot magnetic tape, and from binary programming to FORTRAN I and COBOL. At MIT, Professor Doug Ross, learning from the experience of the earlier NC languages, produced a definition of the Automatically Programmed Tooling (APT) language, the intention being to find a modern replacement for the already archaic languages that had proliferated across the 1950s landscape. Things were suddenly beginning to move very fast, though it didn’t seem that way at the time.

5.3 New Beginnings

Since MIT had not actually implemented APT, the airframe manufacturers’ somewhat loose computer association got together in 1961 to write an APT compiler for the IBM 7090. Each company sent a single programmer to Convair in San Diego, and it took about a year to do the job, including the user documentation. This was almost a miracle, and was largely due to Professor Ross’s well-thought-through specification.

When our representative, Henry Pinter, returned from San Diego, I assumed the factory would jump on APT, but they didn’t. At the Thursday morning interdepartmental meetings, whenever I said, “APT is up and running, folks, let’s start using it”, Don King from Manufacturing would say, “but APT don’t cut no chips”. (That’s how we talked up there in the Pacific Northwest.) He was dead against these inter-company initiatives; he dared not commit the company to anything we didn’t have full control over. Eventually, however, I heard him talking. The Aerospace Division (Ed Carlberg and Ken McKinley) was testing the APT compiler, but only up to the point of a printout; no chips were being cut because Aerospace did not have a project at that time. So I asked them to make me a few small parts and to sweep up some chips from the floor, which they kindly did. I secreted the parts in my bag and had my secretary tape the chips to a piece of cardboard labeled ‘First ever parts cut by APT’. At the end of the meeting someone brought up the question of APT. ‘APT don’t cut no chips’ came the cry, at which point I pulled my bag out from under the table and handed out the parts for inspection. Not a word was spoken: King’s last stand. (That was how we used to make decisions in those days.)

These things happened in parallel with Grant Erwin’s development of the 727-CAD system. Moreover, even the first version of APT could accept interpolated data points from CAD, which made it possible to tie the one system to the other in what must have been the first-ever CAM-CAD system. When I look back on this feature alone, nearly fifty years later, I find it nothing short of miraculous, and a tribute to Doug Ross’s deep understanding of what the manufacturing world would need. Each reference to the surface definition was made in response to a request from the Engineering Department, and each numerical cut was given a running Master Dimensions Identifier (MDI) number. This was not today’s CAM-CAD system in action; again, there was no screen, no light pen, no electronic drawing. Far from it; but it worked! In the early 1960s the system was a step beyond anything that anyone else seemed to be doing; you have to start somewhere in life.
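As an illustration of feeding interpolated points from a surface definition into APT, the sketch below samples points around an elliptical section, in the spirit of the 727 centre-engine inlet shown in Section 6.2, and writes each one as an APT-style GOTO/ point-to-point motion statement. The semi-axes, the number of points and the plain parametric sampling are assumptions for illustration only; the actual Master Dimensions interpolation and the exact card format passed to APT are not recorded here.

```python
import math


def ellipse_points(a, b, n):
    """Return n points at equal parameter steps around an ellipse with
    semi-axes a (x direction) and b (y direction), centred on the origin."""
    return [
        (a * math.cos(2 * math.pi * k / n), b * math.sin(2 * math.pi * k / n))
        for k in range(n)
    ]


def _fmt(v):
    # Round to 4 decimals; adding 0.0 turns -0.0 into 0.0 so that tiny
    # negative rounding residues do not print as "-0.0000".
    return f"{round(v, 4) + 0.0:.4f}"


def apt_goto_statements(points, z=0.0):
    """Format (x, y) points at height z as APT-style GOTO/ statements."""
    return [f"GOTO/{_fmt(x)},{_fmt(y)},{_fmt(z)}" for x, y in points]


if __name__ == "__main__":
    # Hypothetical section: 30 x 20 inch semi-axes, 8 sample points.
    for stmt in apt_goto_statements(ellipse_points(30.0, 20.0, 8)):
        print(stmt)
```

Equal parameter steps are the simplest choice but do not give equal spacing along the curve; a real surface system would choose points to keep the chord error within tolerance.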

6. Developing Accurate Lines

An irony of history was that the first mechanical movements carried out by computers were not a simple matter of drawing lines; they were complicated endeavors of cutting metal. The computer-controlled equipment consisted of vast multi-ton machines spraying aluminium chips in all directions. The breakthrough was to tame the machines down from three dimensions to two, which happened in the following extraordinary way. It is perhaps one of the strangest events in the history of computing and computer graphics, though I don’t suppose anyone has ever published the story. Most engineers know about CAD, but I doubt that anyone outside Boeing knows how it came about.

6.1 So, from CAM to CAD

Back to square one for a moment. As soon as we got the plotter up and running, Art Dietrich showed some sample plots to the Boeing drafting department management. Was the plotting accuracy good enough for drafting purposes? The answer: a resounding No! The decision was that Boeing would continue to draft by hand until the day someone could demonstrate something superior to what we were able to produce. That was the challenge. But how could we meet it? Boeing would not commit money to acquiring a drafting machine (which did not exist anyway) without first subjecting its output to intense scrutiny. Additionally, no machine tool company would invest in such an expensive piece of new equipment without an order or at least a modicum of serious interest. How do you cut this Gordian knot?

In short, at that time computers could mastermind the cutting of metal with great accuracy using three-dimensional milling machines. Ironically, however, they could not draw lines on paper accurately enough for design purposes; they could do the tough job but not the easy one.

However, one day there came a blinding light from heaven. If you can cut in three dimensions, you can certainly scratch in two. Don’t do it on paper; do it on aluminium. It had the simplicity of the paper clip! Why hadn’t we thought of that before? We simply replaced the cutter head of the milling machine with a tiny diamond scribe (a sort of diamond pen) and drew lines on sheets of aluminium. Hey presto! The computer had drawn the world’s first accurate lines. This was done in 1961.

The next step was to prove to the 727 aircraft project manager that our definition of the airplane was accurate and that our programs worked. To test this, they gave us the definition of the 707, an aircraft they knew intimately, and told us to make nineteen random drawings (canted cuts) of the wing using this new idea. This we did. We trucked the inscribed sheets of aluminium from the factory to the engineering building, and for a month or so engineers on their hands and knees examined the lines with microscopes. The Computer Department held its breath. To our knowledge this had never happened before. Ever! Anywhere! We ourselves could not be certain that the lines the diamond had scribed would match accurately enough the lines drawn years earlier by hand for the 707. At the end of the exercise, however, industrial history emerged at a come-to-God meeting. In a crowded theatre the chief engineer rose to his feet and said simply that the design lines the computer had produced had been under the microscope for several weeks and were the most accurate lines ever drawn, by anybody, anywhere, at any time. We were overjoyed, and the decision was made to build the 727 with the computer. That, I believe, is the closest anyone ever came to witnessing the birth of Computer-Aided Design. We called it Design Automation. Later, someone changed the name. I do not know who it was, but it would be fascinating to meet that person.

6.2 CAM-CAD Takes to the Air

Here are pictures of the first known application of CAM-CAD. The first picture shows the prototype of the 727. Here you can clearly see the centre engine inlet just ahead of the tailplane. Seen from the front it is elliptical, as the following sequence of manufacturing stages shows:

(Images of the manufacturing stages of the 727 engine inlet are shown here)

6.3 An Unanticipated Extra Benefit

One of the immediate, though unanticipated, benefits of CAD was the ease of transferring detailed design data to subcontractors. Because of our limited manufacturing capacity, we subcontracted a lot of parts, including the rear engine nacelles (the covers), to the Rohr Aircraft Company of Chula Vista, California. When their team came up to Seattle to collect the drawings, we instead handed them boxes of data in punched-card form. We also showed them how to write the programs to feed their NC machinery. Their team leader, Nils Olestein, could not believe it. He had dreamed of the idea but never thought he would see it in his lifetime: accuracy in a cardboard box! Remember that in those days we did not have email or the ability to send data as electronic files.

6.4 Dynamic Changes

The cultural change brought to Boeing by the new CAD systems was profound. Later on we acquired a number of drafting machines from the Gerber Company, which now knew that there would be a market in computer-controlled drafting, and the traditional acres of drafting tables slowly began to disappear. Hand drafting had been a profession since time immemorial. Suddenly its existence was threatened, and after a number of years it no longer existed. The same goes for architecture and almost any activity involving drawing.

Shortly afterwards, as the idea caught on, people started writing CAD systems which they marketed widely throughout the manufacturing industry as well as in architecture. Eventually our early programs vanished from the scene after being used on the 737 and 747, to be replaced by standard CAD systems marketed by specialist companies. I suppose, though, that even today’s Boeing engineers are unaware of what we did in the early 1960s; generally, corporations are not noted for their memory.

Once the possibility of drawing with the computer became known, the idea took hold all over the place. One of the most fascinating areas was the making of movie frames. We already had flight simulation; Boeing ‘flew’ the Douglas DC-8 before Douglas had finished building it. We could actually experience the airplane from within. We did this with analog rather than digital computers. Now, with digital computers, we could look at an airplane from the outside. From drawing aircraft it was a short step to drawing other things, such as motorcars and animated cartoons. At Boeing we established a Computer Graphics Department around 1962, and by 1965 it was making movies by computer. (I have a video tape made from Boeing’s first-ever 16mm movie, if anyone’s interested.) Although slow and simple by today’s standards, it had become an established activity. The rest is part of the explosive story of computing, leading up to today’s marvels such as computer games, Windows interfaces, computer processing of film and all the other wonders of modern life that people take for granted. From non-existent to all-pervading within a lifetime!

7. The Cosmic Dice

Part of the excitement of the computer revolution that we have brought about over these sixty years lay in the unexpected benefits. To be honest, a lot of what we did, especially in the early days, was pure serendipity; it looked like a good idea at the time, but there was no way we could properly justify it. I think that, had we had to undertake a solid financial analysis, most of the projects would never have got off the ground, and the computer industry would not have got anywhere near today’s levels of technical sophistication or profitability. Some of the real payoffs have come from the cosmic dice throwing us a seven. This happened twice with the very first 727.

The 727 rolled out in November 1962, on time and within budget, and flew in February 1963. The 727 project team were, of course, dead scared that it wouldn’t make those dates. But the irony is that it would not have happened had we not used CAD. During the early period, before the first full-scale mockup was built and while the computer programs were being integrated, we had a problem fitting the wing to the wing-shaped hole in the body: the wing-body join. The programmer responsible for that part of the body program was yet another Swiss, by the name of Raoul Etter. He had what appeared to be a deep bug in his program and spent a month trying to find it. As all good programmers do, he assumed that it was his program that was at fault. But in a moment of utter despair, as life was beginning to disappear down a deep black hole, he went cap in hand to the wing project to own up. “I just can’t get the wing data to match the body data, and time is no longer on my side.” “Show us your wing data. Hey, where did you get this stuff?” “From the body project.” “But they’ve given you old data; you’ve been trying to fit an old wing onto a new body.” (The best time to make a design change is before you’ve actually built the thing!) An hour later life was restored, and the 727 became a single numerical entity. But how would this have been caught had we not gone numerical? I asked the project. At the full-scale mockup stage, they said. And, in addition to the serious delay, what would the remake have cost? In the region of a million dollars. Stick that in your project analysis!

The second occasion was just days prior to roll-out. The 727 has leading-edge flaps, but at installation they were found not to fit. New ones had to be produced overnight, this time from the right data, and thanks to the NC machinery we managed it. Don’t hang out the flags before you’ve swept up the final chip.

8. A Fascinating Irony

This discussion is about using the computer to make better pictures of other things. At no time did any of us have the idea of using pictures to improve the way we ran computers. This had to wait for Xerox PARC, a decade or so later, to throw away our punched cards and rub our noses in a colossal missed opportunity. I suppose our only defence is that we were being paid to build airplanes, not computers.

9. Conclusion

In summary, CAM came into existence during the late 1950s, catalyzing the advent of CAD in the early 1960s. This mathematical definition of line drawing by computers then radiated out in three principal directions: (a) highly accurate engineering lines and surfaces, (b) faster and more accurate scientific plotting, and (c) very high-speed animation. Indeed, the world of today’s computer user consists largely of pictures; the interface is a screen of pictures, and a large part of technology lessons at school uses computer graphics. And we must remember that the computers of that time were minuscule compared with today’s PCs in terms of memory and processing speed. We’ve come a long way from that 727 wing design.

n5321 | June 15, 2025, 23:23

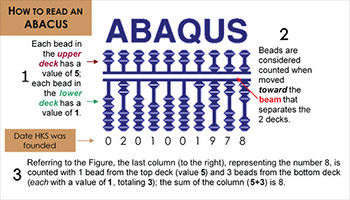

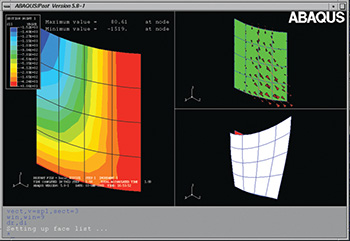

In 1968 the Englishman David Hibbitt was a couple of years into his Ph.D. in solid mechanics at Brown University in Providence when he switched advisers to work with Pedro Marcal, a young assistant professor who’d arrived from London with two boxes of punch cards containing a version of the SAP finite element program. Pedro was trying to extend the program to model nonlinear problems including plasticity, large motions and deformations; David had recently discovered Fortran programming and found that he liked writing code, as well as the challenge of applying solid mechanics to engineering design.

So David and Bengt decided to try on their own. “Almost everyone whose advice we sought told us that we would fail,” David remembers. “There were already 22 viable FE programs out there, competing for industry business, and even the largest computers were too limited to do nonlinear calculations of practical size.” But they had enough in savings to feed their families and pay their mortgages for a year. And so the ABAQUS software was conceived. There’s a message in the company’s first logo, a stylized abacus calculator: its beads are set to the company’s official launch date of February 1, 1978 (2-1-1978).

In those days HKS (Hibbitt, Karlsson & Sorensen) personnel would physically install their software at a customer’s site whenever a new license was purchased. They’d bring the source code on tape to the customer’s location, compile the program and make it work on that customer’s computer, run all the examples, and then check the printed output against microfiche copies of the results from previous versions of the code.

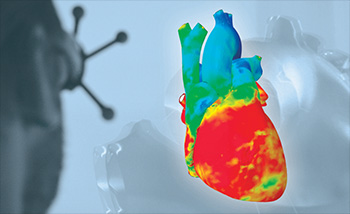

That “why” was reintroduced to David Hibbitt recently when Steve Levine, the leader of SIMULIA’s Living Heart Project, had the opportunity to put 3D glasses on him, so the Abaqus founder could view the company’s flagship 3D model of a beating human heart, complete with blood flow, electrical activation, cardiac cycles, and tissue response on the molecular level.

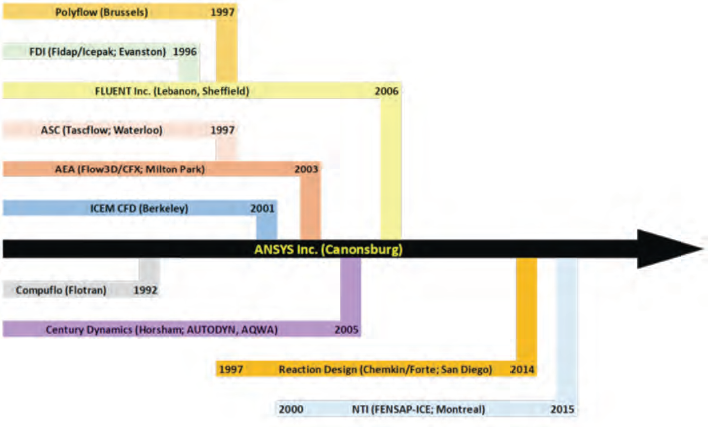

Much to my surprise, it seems there hasn’t been much movement there. ANSYS still seems to be the leader for general simulation and multi-physics, and NASTRAN is still popular. There is still no viable open-source solution.

The only new player seems to be COMSOL. Does anyone have experience with it? Would it be worth a try for someone who knows ANSYS and NASTRAN well?